Replication in Configuration Manager is talked about pretty frequently. It’s a pretty awesome feature, but also a pain at times. One of the reasons I find it to be so cool is that it takes advantage of a SQL feature called Change Tracking. This feature is the fundamental basis for replication – and is a SQL feature rather than a CM feature. There’s so much to talk about when it comes to change tracking that I doubt this will be the only blog I write about it. So, stay tuned for additional posts on the subject.

The purpose of this post isn’t to explain completely how change tracking works, but a bit of an overview is probably necessary:

Change Tracking Overview

When change tracking is enabled every change is recorded and kept for a defined period of time. These changes are stored in two different tables. There is a change table for the specific table (on which change tracking is enabled) which will contain the details about what has been changed. These tables are referred to as “side tables” and have a naming convention of “change_tracking_”. The second place where the changes are stored is in a table which contains all the changes for all tables. This table doesn’t contain the details but rather a pointer to the side tables of the changes. This table is called “syscommittab”. Note: a DAC connection is required to look into these internal tables unless all you’re looking for is a row count.

The side tables and syscommittab need to be cleaned up routinely based on the retention period defined for change tracking. SQL takes care of this cleanup as part of background tasks/maintenance. Specifically, (as far as I know), there is an automatic background thread which triggers every 30 minutes to cleanup the side tables. When this is kicked off it will delete up to 5,000 records at a time. The syscommittab records aren’t handled by the same background task but rather when a CHECKPOINT is issued. When this is triggered up to 10,000 records will be deleted at a time. This means that SQL takes care of all the storing of changes as well as maintaining the cleanup and neither you nor CM needs to do any maintenance. However…that isn’t entirely true. That’s how it’s supposed to be, but alas, it isn’t.

The Issue

For some reason (or reasons?), there are times when the cleanup just doesn’t happen. Or, if it happens not enough is performed. You may see this as not really a big deal because who cares if a table is a little larger or storing data longer than we really want to right? Well, the problem is, if these tables get large and aren’t cleaning up correctly (thus they keep growing) replication can be greatly impacted, and that means the entire system will be impacted negatively. Replication (DRS/RCM) uses these tables to determine the changes which need to be replicated or sent to the other sites. So when the change tables are really big this process takes longer. And, because these same tables must be written to every time there is a change and the system is still writing changes – from replication information from other sites as well as processing the data from clients/MPs/etc – there is more contention. Writes take longer. Reads take longer. And, Deletes take longer…if they happen at all. So, the whole system is impacted and many times this is the start of a spiral – since the tables just get worse and worse so do the reads and writes.

If you have a hierarchy and haven’t hit this issue then count yourself lucky, but you should at least know about the potential issue if not plan for it. If you’re in the 100k+ club then you more than likely have this issue even if you don’t know it. And, if you have hit this issue then I’m guessing you’re reading this in hopes that I have an answer. Well, I believe I do. Actually, I think I have multiple answers, but I’m just going to talk about one solution in this post.

In my experience I’ve not had much of an issue with the side tables not getting cleaned up, but rather with syscommittab. So, this solution is specifically addressed at syscommittab.

The SQL team added a stored procedure which can be used to manually cleanup syscommittab in the same way a CHECKPOINT does. This sproc is named “sp_flush_commit_table_on_demand” and was a promising addition to being able to manage syscommittab. The problem is…it only works sometimes. Yeah, that’s right. After looking into this over and over it looks as though whatever issue there is with the CHECKPOINT logic also exists in this stored procedure. And at this point, it looks like the issue is with the “safe_cleanup_version” that is returned in the sproc.

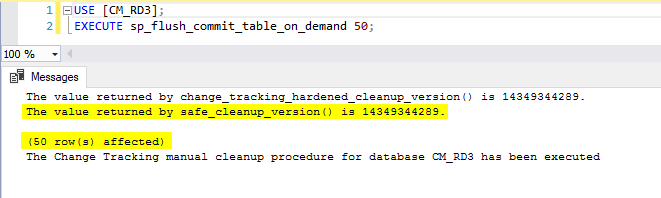

When this sproc is run it gets a couple of values (cleanup versions) and then starts to cleanup syscommittab based on those values. The output/results of running this sproc should look something like this (Note: you can provide a number of records to cleanup or leave that off to clean all records, in this example I used “50” to just cleanup 50 records):

As you can see, it did in fact cleanup 50 records as I asked it to. The reason for that is because it was able to return a value for the “safe_cleanup_version” (and there were records that needed cleaning up). However, the majority of the time (in my experience anyway) this sproc doesn’t return a value for the safe_cleanup_version and instead returns “0” and therefore doesn’t do anything regardless of how many records are needing cleanup. This is generally what I see returned (especially when there is an issue with syscommittab):

I’ve got a case open for this so hopefully there will be a fix to this in the future which would make manual intervention unnecessary. But, until then I’ll be using manual methods to make sure it’s cleaned up. I mentioned above that I have more than one way of taking care of the cleanup – a while back CSS wrote a stored procedure which I then modified and began using. I recently discovered an issue with the procedure’s logic for cleaning up – I had misunderstood (and apparently CSS did too) how the cleanup versions (or watermarks) were supposed to be used in the cleanup. So, the sproc doesn’t work as often as it should or as well as it should. I’ve started to fix that script and perhaps will share that in a future post when it is completed.

I’ve got a case open for this so hopefully there will be a fix to this in the future which would make manual intervention unnecessary. But, until then I’ll be using manual methods to make sure it’s cleaned up. I mentioned above that I have more than one way of taking care of the cleanup – a while back CSS wrote a stored procedure which I then modified and began using. I recently discovered an issue with the procedure’s logic for cleaning up – I had misunderstood (and apparently CSS did too) how the cleanup versions (or watermarks) were supposed to be used in the cleanup. So, the sproc doesn’t work as often as it should or as well as it should. I’ve started to fix that script and perhaps will share that in a future post when it is completed.

The Solution

So what’s this post’s solution? Well, it’s to use the internal stored procedure to perform the cleanup by running the sproc as many times as it takes until it finds the safe_cleanup_version and performs the cleanup. Pretty simple really. However, in my experience, it can sometimes be very difficult to get the safe_cleanup_version to return anything but “0”. Therefore, I wrote a PowerShell script to execute the sproc until it does return a value and cleans up.

I am using PowerShell because that was the easiest way I could think of to programmatically check if a value was returned and to capture the number of “row(s) affected”. This is because the lines returned by the sproc are the results of PRINT commands and the “row(s) affected” line(s) only exist if NOCOUNT is off. For example, here’s the result of the sproc when NOCOUNT is explicitly turned off (compare to the previous screenshot):

Notice how there are no lines for “row(s) affected”? That’s because that isn’t something displayed due to the sproc but rather the system – the record count from the statement completion. I don’t know of an easy way in SQL to capture PRINT statements or the rows affected from a stored procedure (you don’t write/modify yourself that is). But, by using PowerShell and adding some event handlers I can grab both of these things. And, since I have to capture and parse the output to determine if I need to try running the sproc again or not this was the simplest solution I thought of. And, the greatest benefit of this is that it still uses the internal SQL sproc to perform the work – so it’s the recommended way to perform syscommittab cleanup! Lastly, this doesn’t require you to be connected to the DAC so that’s another added benefit.

One thing to know about this internal sproc is that it only deletes a maximum of 10,000 records at a time – so if there are a million records to cleanup it will delete them in batches of 10 thousand (as long as you haven’t told it to delete less).

The script (for automation)

The script is parameterized so you should be able to use it as is just by passing in your values/desires. Here’s a quick review of the parameters and their explanations:

| Parameter | Mandatory? | Default Value | Notes/Explanation |

| ServerName | Y | None | Provide the SQL Server name (and Instance Name if not a default instance) |

| DatabaseName | Y | None | Provide the Database Name |

| LogDirectory | N | The directory where the PS script is located | The directory in which to write the log file |

| LogFileName | N | SqlSysCommitTabCleanup.log | The name of the log file. This must end with ‘.log’. |

| LogTableName | N | DBA.dbo.CommandLog | The 3 part name of the CommandLog table (i.e.: database.schema.table) |

| MaxIterations | N | 1800 | The number of times to try to cleanup before stopping the script (waits a second between trials); 1800 = 30 min (if all trials don’t find a safe cleanup) |

| ConnectionTimeout | N | 120 (2 min) | How long to wait for a connection timeout (in seconds). Note: The max is 20 minutes. |

| CommandTimeout | N | 172800 (48 hours) | How long to let the SQL script execute before stopping (in seconds). |

| TotalRecordsToDelete | N | Null (aka, delete all) | The total number of records to delete. Note: if this is not specified then all records will be deleted |

| LogToFile | N | TRUE | If this is set to true then a log file will be created and logged to |

| LogToTable | N | TRUE | If this is set to true then summary info will be logged to a table |

| VerboseLogging | N | FALSE | If this is set to true then Verbose information will be output |

The first thing I want to call out is that if you are going to log to a table you need to make sure to update the parameter “LogTableName” to the full name of the table in which you want to log. The problem with this is that even if you properly enter a logging table the script will only work if that table contains the following fields (and doesn’t require any other values):

| Field | datatype | Allow Nulls? | Notes |

| ID | int | No | Must be unique; best if this is the primary key and/or an identity column |

| DatabaseName | sysname | Yes | |

| ExtendedInfo | xml | Yes | |

| Command | nvarchar(max) | No | |

| CommandType | nvarchar(60) | No | |

| StartTime | datetime | No | |

| EndTime | datetime | Yes | |

| ErrorNumber | int | Yes | |

| ErrorMessage | nvarchar(max) | Yes |

This is the case because I’m assuming you’re using at least one of Ola Hallengren’s solution for maintenance activities and that you have his “CommandLog” table installed. If you don’t you can download just the table definition at https://ola.hallengren.com/scripts/CommandLog.sql or create your own table with the above fields (and definitions).

The other thing I’ll mention about the script is that if it starts to cleanup and is deadlocked the records that were cleaned up are still counted as the total records deleted AND the script handles the deadlock and keeps trying. I chose to only allow it to hit 5 deadlocks before stopping the script. So, if it runs and hits a few deadlocks that is okay, it’ll keep cleaning up and will even tell you how many times it was deadlocked as well as provide the total count of deleted records!

An example of how to call this PowerShell script is:

.CleanupSyscommittabWithInternalSproc.ps1 -ServerName “SomeSqlSrvr” -DatabaseName “CM_123” -VerboseLogging $true -LogTableName “SomeDB.dbo.CommandLog”

The thing I love about this solution is that you can create a SQL job or a scheduled task to call this script and just check your logged records for information rather than run it manually from time to time.

I need to give a shout out to Sherry Kissinger and Brian Mason for their willingness to help test this out. And, as you’ll notice in the script history, Sherry is responsible for adding the initial logging to file logic. Thanks Sherry and Brian!! (you can read their blogs at https://mnscug.org/blogs/sherry-kissinger and https://mnscug.org/blogs/brian-mason).

The script can be found here: https://1drv.ms/u/s!AmzqhVted60bxFdjcJUTVPJQY-bi

SQL for the table log

If you have the CommandLog table and are logging to the table you can use the following query to look at the log (and check the values stored in the xml). Just comment out or uncomment out the fields you’re interested in.

SELECT TOP 5 ID

,StartTime

,EndTime

,ErrorNumber

,ErrorMessage

–,ExtendedInfo.value(N'(/CleanupInfo/ScriptStart)[1]’,N’datetime’) AS [ScriptStart]

,ExtendedInfo.value(N'(/CleanupInfo/SysCommitTabRecordsAtStart)[1]’,N’bigint’) AS [SysCommitTabRecordsAtStart]

,ExtendedInfo.value(N'(/CleanupInfo/SysCommitTabRecordsAtEnd)[1]’,N’bigint’) AS [SysCommitTabRecordsAtEnd]

,ExtendedInfo.value(N'(/CleanupInfo/TotalSysCommitTabRecordsDeleted)[1]’,N’bigint’) AS [TotalSysCommitTabRecordsDeleted]

–,ExtendedInfo.value(N'(/CleanupInfo/RowsToDeletePerIteration)[1]’,N’int’) AS [RowsToDeletePerIteration]

,ExtendedInfo.value(N'(/CleanupInfo/SafeCleanupVersion)[1]’,N’bigint’) AS [SafeCleanupVersion]

,ExtendedInfo.value(N'(/CleanupInfo/NumberOfDeadlocks)[1]’,N’smallint’) AS [NumberOfDeadlocks]

,ExtendedInfo.value(N'(/CleanupInfo/NumberOfIterations)[1]’,N’bigint’) AS [NumberOfIterations]

–,ExtendedInfo.value(N'(/CleanupInfo/DeadlockMessage)[1]’,N’nvarchar(2000)’) AS [LastDeadlockMessage]

,ExtendedInfo.value(N'(/CleanupInfo/DeadlockTime)[1]’,N’datetime’) AS [LastDeadlockTime]

–,ExtendedInfo.value(N'(/CleanupInfo/EndTime)[1]’,N’datetime’) AS [EndTime]

–,ExtendedInfo.value(N'(/CleanupInfo/CheckRunnabilityIssues)[1]’,N’varchar(2000)’) AS [CheckRunnabilityIssues]

,ExtendedInfo.query(N’/CleanupInfo/InputParameterValues’) AS [InputParameterValues]

–,ExtendedInfo

FROM DBA.dbo.CommandLog

WHERE CommandType = N’SYSCOMMITTAB_POSH_MAINTAINER’

ORDER BY ID DESC;

GO

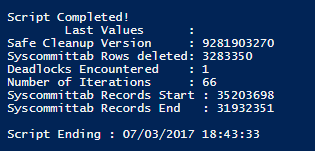

Here’s a sample of a recent log entry from the query above (this doesn’t show all columns as this is just a sample of the important info). As you can see, it had to run the sproc 66 times before it was able to complete the cleanup. And…it encountered 1 deadlock so I guess it technically was able to cleanup twice out of the 66 tries.

Here’s a sample of the final output in the “host”:

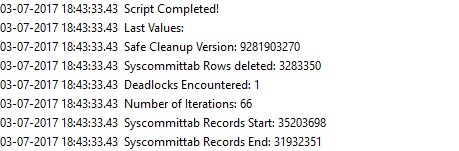

And, here’s a sample of the final output in the log file (in cmtrace of course):

Hope this helps!

Hi Benjamin,

Thanks for this post – I’ve been searching high and low for a robust method to clean up syscommittab for awhile, and this sounds perfect. A few quick questions: when I run the script with PowerShell (entering server and DB name at the prompts), it only ever seems to run 1 iteration and then stops. This is the output I get:

23-02-2018 17:18:03.18 Script Starting

23-02-2018 17:18:03.18 Current Total Syscommittab Records = 148962939

23-02-2018 17:18:03.18 No ScopeId returned so we can’t continue to try and log to a table any more!

23-02-2018 17:18:04.18 Script Completed!

23-02-2018 17:18:04.18 Last Values:

23-02-2018 17:18:04.18 Safe Cleanup Version: 156972007

23-02-2018 17:18:04.18 Syscommittab Rows deleted: 0

23-02-2018 17:18:04.18 Deadlocks Encountered: 0

23-02-2018 17:18:04.18 Number of Iterations: 1

23-02-2018 17:18:04.18 Syscommittab Records Start: 148962939

23-02-2018 17:18:04.18 Syscommittab Records End: 148962939

What am I doing wrong that it doesn’t iterate? The second question has to do with creating the SQL Agent job. I created a job with a PowerShell step and the following command:

cd ‘C:FOLDERPATH’

.CleanupSyscommittabWithInternalSproc.ps1 -ServerName “SERVERNAME” -DatabaseName “DBNAME” -VerboseLogging $true -LogTableName “Warehouse.dbo.CommandLog”

When I try to run the agent job, I get the following error: The error information returned by PowerShell is: ‘Exception setting “ForegroundColor”: “Cannot convert null to type “System.ConsoleColor” due to enumeration values that are not valid. Specify one of the following enumeration values and try again. The possible enumeration values are “Black, DarkBlue, DarkGreen, DarkCyan, DarkRed, DarkMagenta, DarkYellow, Gray, DarkGray, Blue, Green, Cyan, Red, Magenta, Yellow, White”

Any tips on how to resolve? Thanks again for your time!

LikeLike

Unless you accidentally deleted one of the values in the script you shouldn’t get that. If you know you haven’t changed anything in the script then I’d suggest changing your command in the step to the following:

PowerShell C:FOLDERPATHCleanupSyscommittabWithInternalSproc.ps1 -ServerName “SERVERNAME” -DatabaseName “DBNAME” -VerboseLogging $true -LogTableName “Warehouse.dbo.CommandLog”

That way you know you’re using the ‘full version of PS’ rather than sqlps and you’ll be able to see if that is the issue or not. If it still happens…double check that each “ForegroundColor” in the script has a value after it. However, if you run it manually and don’t see that error then it should be fine.

LikeLike

Thanks, using PowerShell C:FOLDERPATHCleanupSyscommittabWithInternalSproc.ps1 -ServerName “SERVERNAME” -DatabaseName “DBNAME” -VerboseLogging $true -LogTableName “Warehouse.dbo.CommandLog” worked! Also, after looking through the verbose logs , I realized that even though the “safe cleanup version” had a value that you’d expect deletes to occur, the “change_tracking_hardened_cleanup_version” still had a very old number. I think these watermarks are unreliable in our environment b/c we turned off auto clean-up due to contention issues (that’s the whole reason I’m doing this investigation – we’re trying to create our own process to manually clean up change tracking on our schedule, to avoid contention on tables with CT). I turned on auto clean-up before beginning these tests, but it seems like it can take (at least) several hours before the watermarks are updated.

In response to your other comment: I’m not familiar with CM (does CM = config manager?). In any case, I opened a new query window from a DAC connection and tried to reference spDiagChangeTracking but it didn’t work – so I don’t think my company has/uses CM.

So, as a follow up question: do you know of a way to force the watermarks to update? I think a process that would work for us is to create an agent job that does the following:

1) temporarily turn on auto clean up so that watermarks can be refreshed

2) figure out a way to force watermarks to “refresh” (or possibly wait several hours, if we have to 😦 )

3) run the powershell script

4) Turn auto clean-up back off

And run this process on a scheduled interval. Thanks for your help!

LikeLike

You got in the spam folder again no idea why. CM = ConfigMgr. You’d have to connect to the DAC and then run “USE CM_XYZ” (replacing XYZ with your sitecode) first.

I’m on my phone so I’ll have to re read this all later and get back to you.

LikeLike

Hi Benjamin,

Thanks for this post – I’ve been searching high and low for a robust method to clean up syscommittab for awhile, and this sounds perfect. A few quick questions: when I run the script with PowerShell (entering server and DB name at the prompts), it won’t delete anything in my scenario. The log shows the following:

26-02-2018 08:12:04.12 Script Starting

26-02-2018 08:12:05.12 Current Total Syscommittab Records = 148962939

26-02-2018 08:12:05.12 No ScopeId returned so we can’t continue to try and log to a table any more!

26-02-2018 08:12:14.12 Script Completed!

26-02-2018 08:12:14.12 Last Values:

26-02-2018 08:12:14.12 Safe Cleanup Version: 294788369

26-02-2018 08:12:14.12 Syscommittab Rows deleted: 0

26-02-2018 08:12:14.12 Deadlocks Encountered: 0

26-02-2018 08:12:14.12 Number of Iterations: 9

26-02-2018 08:12:14.12 Syscommittab Records Start: 148962939

26-02-2018 08:12:14.12 Syscommittab Records End: 148962939

If I query the syscommittab using DAC, I can see there are millions of records with a commit_ts value less than the safe cleanup version of 294788369, so I’m not sure why they won’t delete. Any suggestions or pointers would be greatly appreciated!

LikeLike

Hi Chris, Thanks! Your comments went directly to the spam filtered items for some reason – I happened to just check the spam folder and saw your two legitimate comments in there! Glad I found them. 🙂

So, the reason the script doesn’t iterate or clean anything up is because the sproc is returning a valid “Safe Cleanup Version”. By valid I mean it isn’t 0. That means SQL actually thinks it’s up to date. This is why I named this “part 1” because I had a feeling I’d have to write another one using other methods to clean it, which may or may not be fully sanctioned. 🙂

Are you using CM? If so, I believe there is a newer sproc that was added that may work/be better than what was previously included in CM – I’ve been meaning to check it but I believe it is very similar to the one I created before creating this PowerShell script (so I could say I was doing the approved/sanctioned method to CSS). I’d share mine but I know it needs a little work so would prefer to fix it a little before sharing, but will if you’re in a pinch. First let me see the name of the CM sproc and do a quick check of it…

Okay, yeah I found it. It’s pretty dang close to what I wrote before writing the PS code. I’d give it a try (I expect it will take a long time); try running this: “EXECUTE spDiagChangeTracking 1, 0, 100000;” You will have to run that from a DAC connection though. You can review the progress of it via a different connection by running “SELECT * FROM ##SPDiagCleanupCT;”.

LikeLike

Hey Benjamin! The normal EXEC spDiagChangeTracking @CleanupChangeTracking = 1 doesnt cleanup my syscommittab table which led me to your blog. I am trying to run your script in my lab on SQL2012R2 because i have change tracking data that is 18 days old in the syscommitttab table which flags a prerequisite warning when updating SCCM. I get the following error when I run this script

**************************************

Exception Caught in ‘CheckRunnability’ function. Error Message:

Exception calling “ExecuteScalar” with “0” argument(s): “Invalid column name ‘is_primary_replica’.”

Server/Database not ‘runnable’ for the following reason(s): Exception calling “ExecuteScalar” with “0” argument(s): “Invalid column name ‘is_primary_replica’.”

Any ideas?

LikeLike

PS. My SQL knowledge is poor…but I have no database replications thus no values in sys.dm_hadr_database_replica_states.

Maybe the script isnt intended for my issue..

LikeLike

Hmmm…the script is intended to work regardless of that. However, it sounds like it’s an issue with schema and your version of SQL (but I could be wrong). I can’t look at it today but I’ll take a look tomorrow and see what I can find/do.

LikeLike

Yup, I was right. That column is only available as of SQL Server 2014. My bad. Let me come up with an updated version to account for older versions of SQL Server…

LikeLike

Okay, the script is updated. Hope that helps!

LikeLiked by 1 person

Hey Benjamin, the SP in SCCM 1906 seems to be doing the trick. I have looked at the SP code and there is definitely better handling around failed cleanups (I have updated my blog post to reflect this). I’ll revert some snapshots and test your modified script too when I get a sec. Thanks for your time on this, much appreciated!

LikeLike

You sir, are a splendid human being. I’ll check it out! Garth Jones said the SP will now run auto cleanup in SCCM 1906! I have asked for more details because this suggests there was a code issue there too.

LikeLike

That’s not entirely accurate. The SP now better accounts for when autocleanup has issues. But it doesn’t ‘run auto cleanup’. That’s still managed by SQL and dark magic.

LikeLiked by 1 person

Sorry I misquoted you Garth, combination of too much BBQ and sunshine today 🙂

LikeLiked by 1 person

Hi,

Our change tracking keeps going back to FALSE. We’ve changed it back to TRUE twice now. How come this happens and what can we do to prevent it?

LikeLike

I’m not sure I understand exactly what’s happening. Are you saying CT just gets disabled automatically?!

LikeLike

Hi Benjamin – We can really use a solution for cleaning up the commit table; unfortunately, your script (nor any other solution) will work with a SQL 2008 SP2 instance. It is a long story why we’re still using that antiquated version, but it is what it is. sp_flush_commit_table_on_demand wasn’t introduced until (I think) 2016. We do have sp_flush_commit_table, but that is what’s used by the scheduled job, and it’s gone nowhere for many months. Any ideas on what we can do about cleanup on our environment?

LikeLike

Hey Nick! Are you running Configuration Manager?

LikeLike

After an issue with one of my Configuration Manager database, followed the procedure from this KB and all side tables were cleaned, but not sys.syscommittable.

Your script did the trick! Thank you sir!

https://support.microsoft.com/en-us/topic/kb4538365-fix-change-tracking-cleanup-does-not-work-when-invalid-cleanup-and-hardened-cleanup-version-are-negative-in-sql-server-2017-and-2019-f186bd92-622c-89f2-a6c2-739c250593f2

LikeLiked by 1 person